From the Launch of OpenAI's Official o1 Model: Examining 2025 AI Trends and Potential Investment Opportunities in Tech Stocks

2024/12/9

Where Are the Opportunities for Reinforcement Fine-Tuning Models in U.S. Software and Hardware Stocks?

Introduction:

Starting December 5th, OpenAI is hosting a 12-day series of online demos, beginning with the official release of its new o1 model. Today marks the second day of the event. Over these two days, we have seen significant advances in this AI language model compared with its predecessors. Most notably, reinforcement fine-tuning has made considerable progress, which we believe pushes AI agents into a second phase of reasoning development.

We expect these advances in reinforcement fine-tuning to have meaningful implications for investing in U.S. tech stocks in 2025. In today's analysis, we look at how the reinforcement fine-tuning capability introduced alongside the official o1 release might shape 2025 U.S. stock market investment strategies, and we highlight potential opportunities.

Sources: OpenAI's 12 Days Demo Videos

Day 1 Video: Official launch of o1 (including o1 pro mode)

Day 2 Video: Reinforcement fine-tuning, the key new capability highlighted for the latest model

Sora, OpenAI's highly anticipated video-generation model, had not been released when this article was completed. It may, however, be released during the 12 Days event, and teaser-like videos already hint at a possible release.

Conclusion:

1. The launch of the o1 model, with its emphasis on reinforcement fine-tuning, marks a significant step toward the second phase of AI agent applications.

2. The o1 model's most critical impact on U.S. corporations is that it narrows the competitive gap between small and medium-sized enterprises (SMEs) and large corporations. We expect AI-driven optimizations to produce significant performance growth at some SMEs by 2025.

3. The software and hardware sub-industries present potential investment opportunities in 2025, with signs of these opportunities already emerging in this quarter’s earnings reports. Growth is expected to accelerate in the first half of 2025.

4. Promising sectors include financial engineering (finance), genomics, pharmaceuticals (biomedicine), and digital marketing.

5. Companies with strong data advantages, such as PLTR, will remain major winners. However, PLTR's current valuation is notably high.

Key Points and Highlights of the Video:

Introduced Reinforcement Fine-Tuning (RFT), which lets users train specialized models on custom datasets. (How it differs from standard fine-tuning with supervised learning is discussed later.)

Uses reinforcement learning algorithms to strengthen the model's expertise in specific tasks.

Compared to supervised fine-tuning, RFT allows the model to learn new reasoning methods.

RFT requires only a few dozen examples to teach the model new reasoning techniques.

Currently available for testing by universities, researchers, and enterprises, with a public release planned for next year.

OpenAI stated that RFT is applicable to fields such as law, finance, engineering, and insurance.

This is the first time RFT has been supported on OpenAI's model customization platform, which makes it suitable for scientific research and other specialized areas.

Breakthrough Applications of OpenAI’s o1 Model and Reinforcement Fine-Tuning Technology

OpenAI has officially launched the o1 model. Its headline capability is "deliberative reasoning," which significantly improves the quality and logical consistency of its responses.

As o1 moved from preview to official release, OpenAI also introduced a major new technique: Reinforcement Fine-Tuning (RFT), which lets users train specialized models on custom datasets. Unlike traditional supervised fine-tuning, RFT uses reinforcement learning algorithms. As a result, the model does not merely imitate the examples it is given. It can also learn new ways of reasoning, which substantially improves its performance on specific tasks.
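To make the "learn from scores rather than imitate" distinction concrete, here is a deliberately tiny sketch of the reinforcement-learning idea. It is not OpenAI's RFT implementation. A three-way softmax "policy" stands in for the model, the strategy names and `grader` scores are invented for illustration, and the update rule is plain REINFORCE with a running-average baseline. The policy never sees a correct label. It only receives scores, yet it still shifts probability toward the highest-scoring strategy.

```python
import math
import random
from typing import List

# Hypothetical toy task: the "model" chooses one of three answer strategies.
STRATEGIES = ["guess", "recall", "step_by_step"]


def grader(strategy: str) -> float:
    """Hypothetical rule-based grader: returns a score, never the 'right answer'."""
    return {"guess": 0.0, "recall": 0.4, "step_by_step": 1.0}[strategy]


def softmax(logits: List[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def train_policy(steps: int = 2000, lr: float = 0.1, seed: int = 0) -> List[float]:
    """REINFORCE with a running-average baseline; returns final strategy probabilities."""
    rng = random.Random(seed)
    logits = [0.0] * len(STRATEGIES)
    baseline = 0.0
    for _ in range(steps):
        probs = softmax(logits)
        i = rng.choices(range(len(STRATEGIES)), weights=probs)[0]  # sample an action
        reward = grader(STRATEGIES[i])
        advantage = reward - baseline
        baseline += 0.05 * (reward - baseline)  # track the average reward
        # Gradient of log softmax(logits)[i] w.r.t. logits[j] is 1[j == i] - probs[j].
        for j in range(len(logits)):
            logits[j] += lr * advantage * ((1.0 if j == i else 0.0) - probs[j])
    return softmax(logits)


if __name__ == "__main__":
    for name, p in zip(STRATEGIES, train_policy()):
        print(f"{name}: {p:.3f}")
```

The baseline does not change the expected update. It only reduces variance, which is why the policy can improve from noisy scalar feedback alone.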

Core Advantages of RFT: Efficiency and versatility. With only a few dozen examples, the model can learn new reasoning techniques, significantly reducing training time.

Medical Application in Rare Disease Research: Approximately 300 million people worldwide are affected by rare diseases, and patients often wait months to years for a diagnosis. In the demo, OpenAI showed how researchers (working with a computational biologist from Berkeley Lab) can use o1's reasoning capabilities together with RFT. This lets them combine medical expertise with systematic reasoning over data to identify connections between symptoms, clinical signs, and causative genes, which could drastically shorten the diagnostic timeline. OpenAI separately cited Thomson Reuters, which used RFT to build a legal assistant, as an early partner.
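The rare-disease example depends on a grader that turns a model's answer into a score. The sketch below assumes that the model returns a ranked list of candidate genes and that each training case has one known causal gene. The scoring rule, the case format, and the example predictions are all hypothetical. The rule gives full credit for rank 1, reciprocal-rank partial credit below that, and zero if the gene is absent. It was chosen only to show how partial credit yields a graded signal rather than an all-or-nothing one.

```python
from typing import Dict, List


def rank_grader(ranked_genes: List[str], correct_gene: str) -> float:
    """Hypothetical grader: reciprocal rank of the correct gene, 0.0 if absent.

    Rank 1 -> 1.0, rank 2 -> 0.5, rank 3 -> 0.333..., not listed -> 0.0.
    """
    normalized = [g.strip().upper() for g in ranked_genes]
    target = correct_gene.strip().upper()
    if target not in normalized:
        return 0.0
    return 1.0 / (normalized.index(target) + 1)


def mean_score(cases: List[Dict[str, str]], predictions: List[List[str]]) -> float:
    """Average grader score across a set of cases (one ranked list per case)."""
    scores = [rank_grader(pred, case["correct_gene"]) for case, pred in zip(cases, predictions)]
    return sum(scores) / len(scores) if scores else 0.0


# Illustrative cases (hypothetical format): a symptom summary and the known causal gene.
CASES = [
    {"symptoms": "tall stature, lens dislocation, aortic root dilation", "correct_gene": "FBN1"},
    {"symptoms": "recurrent lung infections, elevated sweat chloride", "correct_gene": "CFTR"},
]

if __name__ == "__main__":
    model_outputs = [["FBN1", "TGFBR1"], ["SCNN1A", "CFTR"]]
    print(mean_score(CASES, model_outputs))  # 0.75: rank 1 scores 1.0, rank 2 scores 0.5
```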

As RFT technology becomes more widely adopted, its applications are expanding into various fields, including biochemistry, AI safety, law, and healthcare.

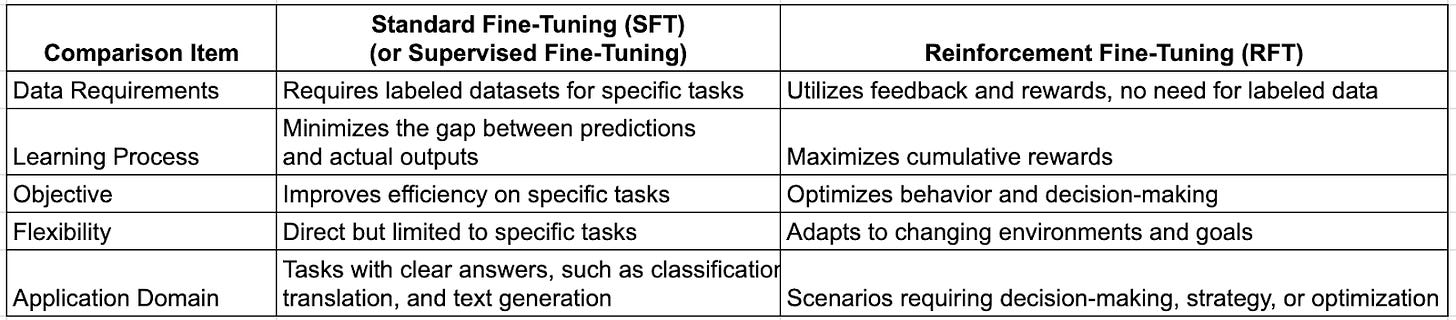

Differences Between Reinforcement Fine-Tuning and Standard (Supervised) Fine-Tuning:

The Impact of the New o1 Model on Data Requirements:

Fundamental Differences in Data Requirements:

Standard Fine-Tuning (Supervised Fine-Tuning):

Requires high-quality labeled data that is directly related to the target task (e.g., sentiment analysis requires sentences labeled with sentiment).

Typically needs a substantial volume of labeled data (from thousands to hundreds of thousands of examples) so that the model can learn enough task-specific features.

Data quality is crucial for model performance—low-quality data may lead to overfitting or unstable results.
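For contrast, supervised fine-tuning needs every training example to carry its target label. The toy below uses a bag-of-words logistic-regression classifier as a stand-in for a model, and the sentences are invented. Real fine-tuning updates a neural network's weights rather than a word-weight table. The objective has the same form in both cases: cross-entropy between the model's prediction and the human-assigned label.

```python
import math
from typing import Dict, List, Tuple

# Hypothetical labeled data: every sentence carries a human-assigned label (1 = positive).
LABELED: List[Tuple[str, int]] = [
    ("great product works well", 1),
    ("terrible support very slow", 0),
    ("works great love it", 1),
    ("slow and terrible quality", 0),
]


def predict(weights: Dict[str, float], bias: float, text: str) -> float:
    """Probability that a sentence is positive (logistic model over word weights)."""
    z = bias + sum(weights.get(w, 0.0) for w in text.split())
    return 1.0 / (1.0 + math.exp(-z))


def train_supervised(data: List[Tuple[str, int]], epochs: int = 200, lr: float = 0.5):
    """Per-example gradient descent on binary cross-entropy against the labels."""
    weights: Dict[str, float] = {}
    bias = 0.0
    for _ in range(epochs):
        for text, label in data:
            # Gradient of cross-entropy w.r.t. the logit is (prediction - label).
            error = predict(weights, bias, text) - label
            for w in text.split():
                weights[w] = weights.get(w, 0.0) - lr * error
            bias -= lr * error
    return weights, bias


def cross_entropy(weights: Dict[str, float], bias: float, data: List[Tuple[str, int]]) -> float:
    eps = 1e-12  # avoid log(0)
    total = 0.0
    for text, label in data:
        p = predict(weights, bias, text)
        total -= label * math.log(p + eps) + (1 - label) * math.log(1 - p + eps)
    return total / len(data)


if __name__ == "__main__":
    w, b = train_supervised(LABELED)
    print(f"training loss: {cross_entropy(w, b, LABELED):.4f}")
    print([round(predict(w, b, t)) for t, _ in LABELED])  # [1, 0, 1, 0]
```

Every update here needs a label. This is why data volume and label quality dominate the cost of standard fine-tuning.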

Reinforcement Fine-Tuning (RFT):

Does not require traditional labeled data but instead relies on feedback data (e.g., scoring functions or human preferences).

Data Sources: Feedback can come from the following:

Professionals scoring the model's outputs (e.g., RLHF, used to fine-tune GPT models, relies on human preference labels over outputs).

Scores generated by business logic, simulated environments, or predefined rules.

Data Quantity: The required data volume is relatively small, as reinforcement learning focuses on "strategy improvement" rather than "feature learning."
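When feedback comes from human preferences rather than a rule, RLHF-style pipelines commonly fit a reward model to pairwise comparisons first. The sketch below fits one scalar score per candidate response using the Bradley-Terry model, in which P(A preferred over B) = sigmoid(r_A - r_B). The response names and comparisons are invented. A production reward model would be a neural network that scores arbitrary text, not a lookup table. The fitted scores can then serve as the feedback signal, just as a rule-based grader's scores would.

```python
import math
from typing import Dict, List, Tuple

# Hypothetical preference data: (preferred_response, rejected_response) pairs
# collected from expert reviewers.
PREFERENCES: List[Tuple[str, str]] = [
    ("cites_sources", "vague_answer"),
    ("cites_sources", "off_topic"),
    ("vague_answer", "off_topic"),
    ("cites_sources", "vague_answer"),
]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def fit_rewards(prefs: List[Tuple[str, str]], steps: int = 500, lr: float = 0.1) -> Dict[str, float]:
    """Gradient ascent on the Bradley-Terry log-likelihood sum(log sigmoid(r_win - r_lose))."""
    rewards: Dict[str, float] = {}
    for win, lose in prefs:
        rewards.setdefault(win, 0.0)
        rewards.setdefault(lose, 0.0)
    for _ in range(steps):
        grads = {k: 0.0 for k in rewards}
        for win, lose in prefs:
            # d/d r_win of log sigmoid(r_win - r_lose) is 1 - sigmoid(r_win - r_lose).
            g = 1.0 - sigmoid(rewards[win] - rewards[lose])
            grads[win] += g
            grads[lose] -= g
        for k in rewards:
            rewards[k] += lr * grads[k]
    return rewards


if __name__ == "__main__":
    fitted = fit_rewards(PREFERENCES)
    for name, r in sorted(fitted.items(), key=lambda kv: -kv[1]):
        print(f"{name}: {r:+.2f}")
```

Only relative scores matter in this model, so the gradients sum to zero and the fitted rewards stay centered around zero.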

Impact on the AI Industry and Future Prospects

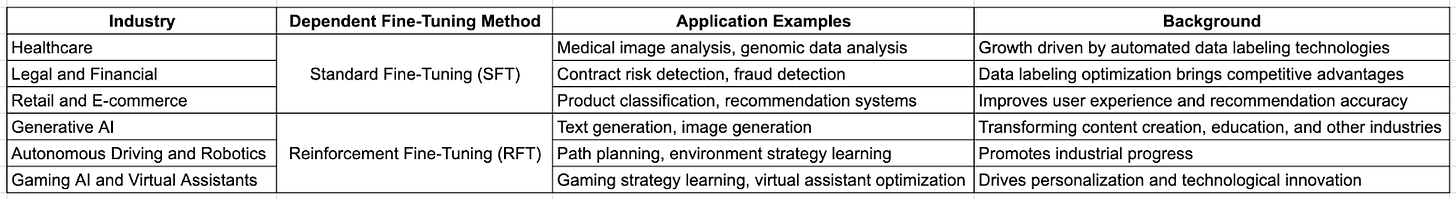

Standard Fine-Tuning is commonly used where high-quality labeled data is available, such as medical image analysis, genomic data analysis, and contract risk detection or fraud detection in the legal and financial fields. In these applications, automating data labeling is a key source of competitive advantage.

Reinforcement Fine-Tuning, by contrast, suits strategy-oriented tasks that depend on feedback signals. Examples include text and image generation in generative AI, path planning for autonomous driving, strategy learning in gaming AI, and virtual assistant optimization. It is expected to drive technological innovation and commercialization in these fields.

Key Trends to Watch for Tech Stock Investment in 2025:

Software: Increasing data demands with a focus on greater specialization and refinement.

Hardware: Rising demand for computational resources, with promising prospects in four key sub-industries, including data processing and storage.

Part 1: The Demand for Reinforcement Fine-Tuning Technology in Software Companies

Industries Relying on Labeled Data (Benefiting from Standard Fine-Tuning):

Healthcare:

Legal and Finance:

Retail and E-commerce:

Industries Relying on Feedback Data (Benefiting from Reinforcement Fine-Tuning):

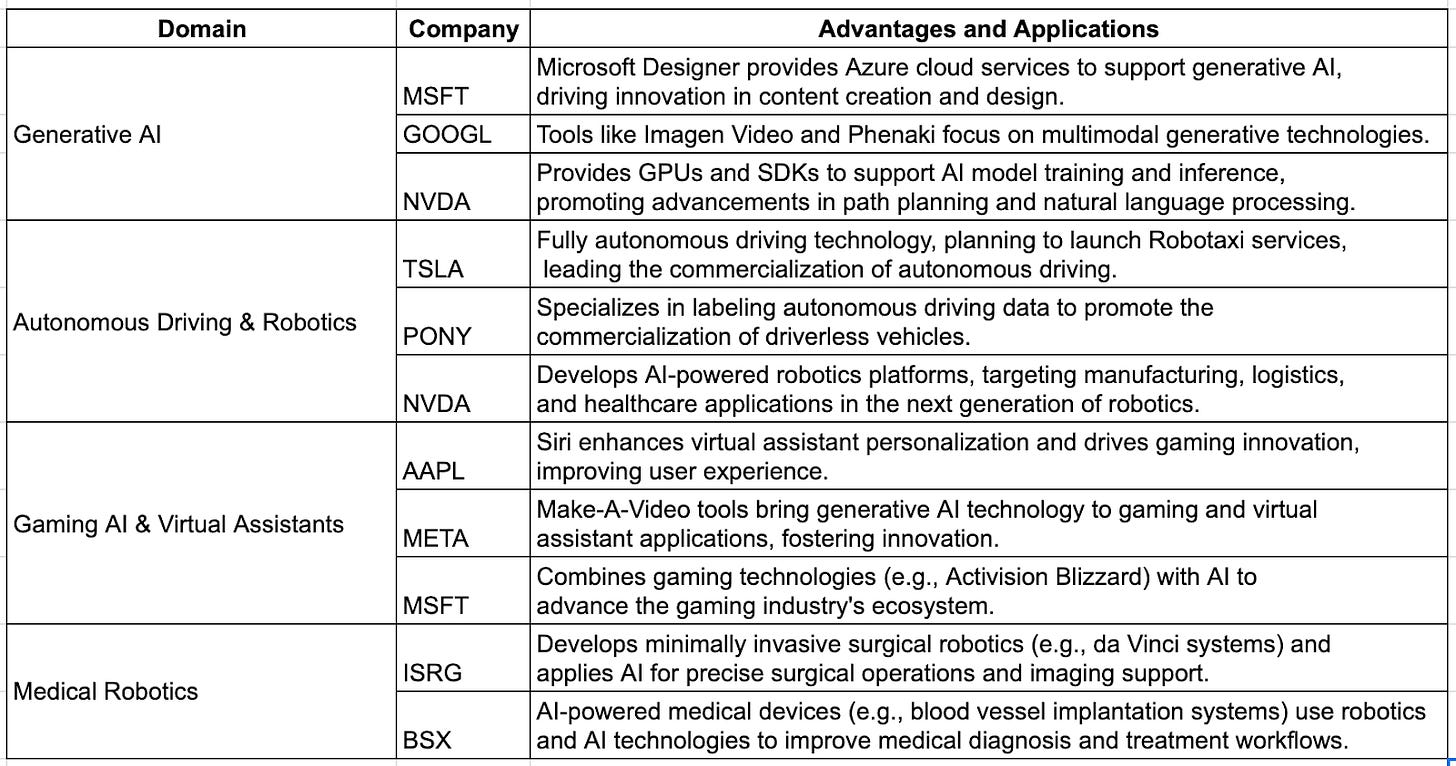

Generative AI: Advances in language models and multimodal generation technologies will significantly transform industries such as content creation, design, and education.

Autonomous Driving & Robotics: Technological breakthroughs may further commercialize autonomous driving and intelligent robotics (benefiting companies like TSLA and ISRG).

Gaming AI & Virtual Assistants: Innovations in gaming AI and personalization of virtual assistants will drive growth for related companies (benefiting AAPL and META).